Researchers at the University of Adelaide have used deep-learning image analysis techniques to predict patient lifespans.

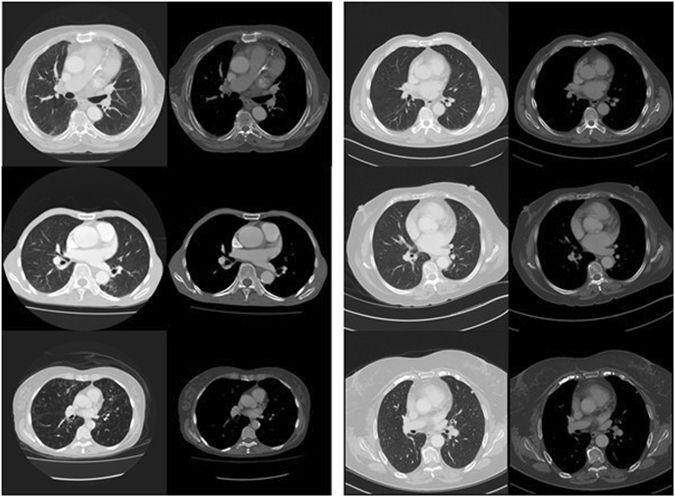

In the proof-of-principle study, published in the Nature journal Scientific Reports today, Dr Luke Oakden-Rayner and his team applied machine learning methods to CT scan imagery to predict patient mortality.

The analysis – based on convolutional neural networks – was able to predict which patients would die within five years, with 69 per cent accuracy, a score comparable to the ‘manual’ predictions made by clinicians.

"When you look at the image, when you look at the inside of someone's body with scanning can you find patterns that reflect mortality? We found we think we can," Oakden-Rayner, a radiologist and PhD student at the university's School of Public Health, told Computerworld.

The group used ‘off-the-shelf machine learning methods’ and applied them to a set of historic CT scans of the major organs and tissue in patients’ chests.

The research suggests that the system has learnt to recognise the appearances of various diseases, “something that requires extensive training for human experts,” Oakden-Rayner said. "What we really wanted to show is even with fairly standard models we can do this kind of thing in a way that's really useful."

The most confident predictions were made for patients with severe chronic diseases such as emphysema and congestive heart failure, although the researchers struggled to identify exactly what the system was 'seeing' in the images to make its predictions (a limitation which is giving rise to a new field of artificial intelligence).

Big picture

While the proof-of-principle study used only a small dataset of CT scan imagery, the team hopes to expand the set to tens of thousands of images and incorporate additional information such as the patient’s age and sex.

One of the challenges with the larger datasets is the size of the CT scan image files, Oakden-Rayner explained.

"When you're training deep learning there's a pretty hard cap on how big a file can be because you have to fit it into GPU memory. And you have to actually be able to fit at least a number of them into the GPU at the same time so you can get a kind of average training of it rather than individual cases,” he said. “There are really strict limits there about how well we can process medical data at the moment and it's not really solved.”

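As a rough illustration of that constraint, the sketch below estimates how many scans could share a GPU during training. Every figure in it is an assumption chosen for illustration, not a number from the study: a hypothetical 300-slice scan at 512 x 512 resolution stored as 32-bit floats, a 12 GiB GPU, and a guessed multiplier of 20 to stand in for the intermediate activations a network holds for each input.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def volume_bytes(depth, height, width, bytes_per_voxel=4):
    """Raw size of one scan volume in bytes (4 bytes per voxel = float32)."""
    return depth * height * width * bytes_per_voxel


def max_batch_size(gpu_bytes, per_sample_bytes, activation_multiplier):
    """How many samples fit if each one costs its raw size times a multiplier.

    The multiplier is a crude, assumed stand-in for activations and
    gradients; real usage depends heavily on the network architecture.
    """
    per_sample_total = per_sample_bytes * activation_multiplier
    return gpu_bytes // per_sample_total


# Hypothetical full-resolution scan: 300 slices of 512 x 512 voxels.
full_scan = volume_bytes(300, 512, 512)
# The same scan with every dimension halved.
small_scan = volume_bytes(150, 256, 256)

full_batch = max_batch_size(12 * GIB, full_scan, 20)
small_batch = max_batch_size(12 * GIB, small_scan, 20)

print(f"full resolution: {full_scan / 1024**2:.1f} MiB per scan, batch of {full_batch}")
print(f"downsampled:     {small_scan / 1024**2:.1f} MiB per scan, batch of {small_batch}")
```

Under these assumed figures only two full-resolution scans fit on the GPU at once, while halving each dimension raises that to 16, which is closer to the "number of them" Oakden-Rayner describes needing for averaged training.
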
To get round the issue, his team downsampled the data, discarding a certain proportion of pixels in each image, “and hope those weren't the ones that contain useful information,” Oakden-Rayner says.
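
One simple way to discard pixels is to keep only every n-th pixel along each axis. The article does not say which downsampling method the team used, so the `downsample` function below is a hypothetical sketch of that strided approach, applied to a 2D image held as a list of rows.

```python
def downsample(image, factor):
    """Keep every `factor`-th row and every `factor`-th pixel within a row.

    This is plain strided subsampling (an assumed method, not necessarily
    the one used in the study). It keeps roughly 1 / factor**2 of the
    pixels and simply throws the rest away, with no averaging.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return [row[::factor] for row in image[::factor]]


# A toy 4 x 4 "image" whose pixel values are 0..15, row by row.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
smaller = downsample(image, 2)

print(smaller)  # [[0, 2], [8, 10]]
```

In this toy case 12 of the 16 pixels are dropped. Any detail that lived only in the discarded pixels is lost, which is the risk Oakden-Rayner alludes to.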

For future research the team may make use of the University of Adelaide’s high-performance computing clusters.

The doctor is out

Research into the application of machine learning to medical problems has ramped up in recent years.

Google – working with UC San Francisco, Stanford Medicine and University of Chicago Medicine – demonstrated in November last year how machine learning could be used to detect diabetic eye disease more accurately than a human ophthalmologist. In March, Google shared its work using machine learning to detect breast cancer metastases in lymph nodes.

A paper from IBM researchers, which is available online and will be published later this year, suggests that machine learning applied to photographs of skin lesions taken with a smartphone was better at identifying melanoma than expert dermatologists.

“These systems are really good at doing perceptual tasks which are things like looking and hearing. Any task that a doctor does that involves looking at an image or looking at a person, potentially these systems could do really well at,” says Oakden-Rayner.

“It's really likely that in the next couple of years we're going to see these systems outperforming humans quite regularly I think.”

That makes such systems hugely significant to medical practice, Oakden-Rayner added, and they could even remove the need for a doctor in making certain decisions.

"The promise of this technology is that it can do perception as well as a human. And a lot of the choices for treatment past that point are fairly simple. So if you perceive pneumonia on a chest scan then there's pretty much one set of antibiotics you use," Oakden-Rayner explained.

"So it is possible to completely take a doctor out of that loop. We're not there yet and there are pretty big ethical and regulation issues around that. But I think no one would say that it's not possible anymore. It really seems like there is capacity now to take doctors out of some medical decisions.”